Artificial Intelligence (AI) has transformed the way we access information, with AI-powered search engines and AI assistants becoming essential tools for navigating the digital world. These systems rely on vast datasets drawn from websites, social media platforms, and user-generated content to provide accurate and relevant responses. However, this dependence on diverse and often unverified data sources creates significant vulnerabilities. Hackers can exploit these AI systems by injecting fake news and manipulated content into the information ecosystem, skewing AI outputs and potentially causing widespread societal, political, and economic consequences. The growing sophistication of AI-driven misinformation campaigns amplifies these risks, making it critical to understand how such exploits occur and their potential impact.

The rapid spread of fake news through social media, combined with advanced techniques like deepfakes and coordinated inauthentic behavior, poses a unique threat to AI search systems. Malicious actors can manipulate the data these systems rely on, tricking them into prioritizing false narratives over accurate information. This not only undermines the trustworthiness of AI responses but can also trigger panic and chaos, from public health scares to financial market disruptions. As AI becomes more integrated into daily life, the stakes of such manipulations grow, necessitating robust defenses and heightened user awareness to mitigate their impact.

How AI Search Systems Work

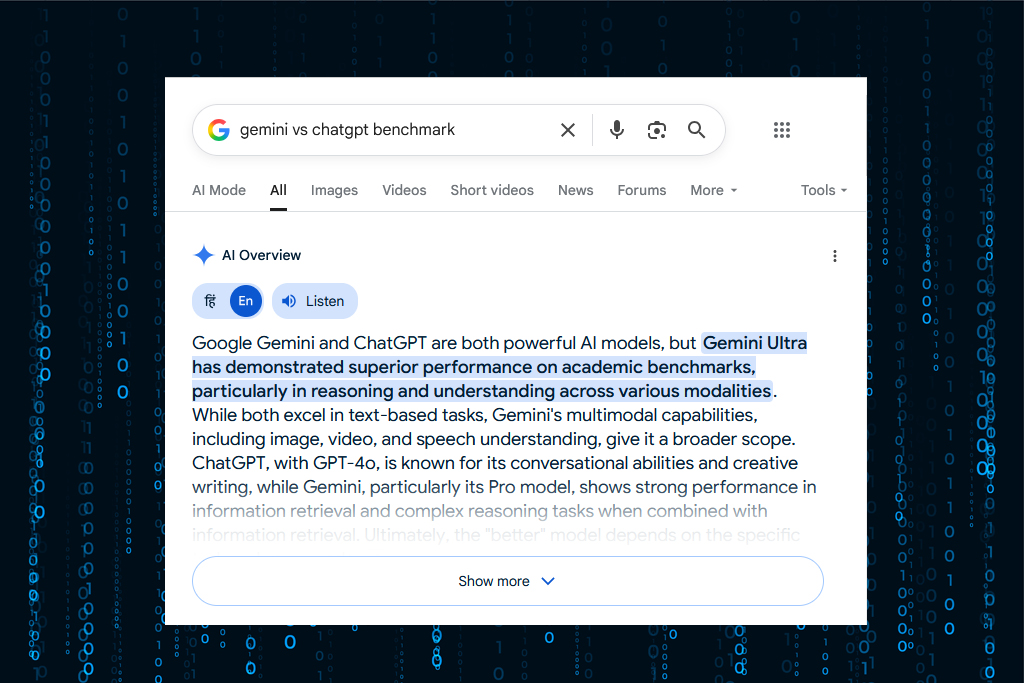

AI search systems and assistants process queries by analyzing patterns in data drawn from websites, social media platforms, and user-generated content. They use machine learning (ML) algorithms to identify relevant information, rank it using signals of relevance and credibility, and generate responses. Many such systems rely on web crawling to gather content and natural language processing (NLP) to interpret queries and sources. However, these systems are not infallible. Their outputs depend heavily on the quality and integrity of the data they process, making them susceptible to manipulation if hackers flood their sources with fake news.

Mechanisms of AI Exploitation through Fake News

Hackers exploit AI search systems by manipulating the data they rely on, particularly through fake news and social media. Below are key mechanisms:

1. Data Poisoning

Data poisoning involves injecting false or misleading information into the datasets used to train or fine-tune AI models. By flooding websites, forums, or social media with fake news, hackers can skew the AI’s perception of reality. For instance, coordinated campaigns on platforms like X, Facebook, and Threads can amplify fabricated stories, making them appear credible due to their volume and apparent engagement. If these stories dominate the data pool, the AI may inadvertently treat them as factual, leading to biased or incorrect responses.
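To make the volume effect concrete, here is a deliberately simplified Python sketch. It assumes a toy aggregator that answers by counting which claim appears in the most retrieved documents; real search and assistant pipelines are far more sophisticated, and the function name `naive_answer` and the example domains are hypothetical.

```python
from collections import Counter


def naive_answer(docs: list[tuple[str, str]]) -> str:
    """Return the claim that appears in the most retrieved documents.

    Toy aggregator (hypothetical): every document gets one vote regardless
    of who published it, so the answer tracks volume, not reliability.
    """
    votes = Counter(claim for _source, claim in docs)
    return votes.most_common(1)[0][0]


# A handful of legitimate reports (hypothetical domains).
clean_corpus = [
    ("water-utility.example", "reservoir water is safe"),
    ("newswire.example", "reservoir water is safe"),
    ("university.example", "reservoir water is safe"),
]

# An attacker floods the pool with 20 fabricated posts from throwaway sites.
poison = [(f"site{i}.example", "reservoir water is contaminated") for i in range(20)]

print(naive_answer(clean_corpus))           # reservoir water is safe
print(naive_answer(clean_corpus + poison))  # reservoir water is contaminated
```

Three accurate documents lose to twenty fabricated ones because nothing in this aggregator weighs source credibility.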

2. Deepfakes and Synthetic Media

Generative AI enables the creation of deepfakes: realistic but fabricated audio, video, or text content. Hackers can use deepfakes to spread false narratives, such as a fake video of a public figure making inflammatory statements. These can be disseminated via social media, where they gain traction before being indexed by AI search systems. For example, a 2019 video alleging a Malaysian political aide’s misconduct, which was widely claimed to be a deepfake, destabilized a coalition government, demonstrating the political impact of synthetic media, or even the suspicion of it.

3. Social Media Amplification

Social media platforms are fertile ground for spreading fake news due to their vast reach and rapid dissemination. Operators can use AI-driven bots to run fake accounts, generate likes, and amplify misleading posts to mimic organic engagement. For instance, the “Doppelgänger” network, a Russia-linked operation, used AI-generated articles, spoofed copies of legitimate news websites, and fake social media accounts to influence audiences in the U.S. and Germany. Such campaigns can trick AI systems into prioritizing manipulated content, especially if it appears popular or credible.
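How bot engagement can distort a popularity signal is easy to sketch. The Python below assumes a hypothetical ranker that orders posts purely by likes plus weighted shares; the `Post` class, the 2x share weight, and the numbers are illustrative, not how any particular platform or search system scores content.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    likes: int
    shares: int


def engagement_score(post: Post) -> int:
    # Hypothetical weighting: a share counts double because it spreads content further.
    return post.likes + 2 * post.shares


def rank_by_engagement(posts: list[Post]) -> list[str]:
    """Order post IDs by raw engagement, highest first."""
    return [p.post_id for p in sorted(posts, key=engagement_score, reverse=True)]


accurate = Post("accurate-report", likes=400, shares=50)
fabricated = Post("fabricated-story", likes=30, shares=5)
print(rank_by_engagement([accurate, fabricated]))  # ['accurate-report', 'fabricated-story']

# A network of 1,000 bot accounts each likes and shares the fabricated story once.
fabricated.likes += 1000
fabricated.shares += 1000
print(rank_by_engagement([accurate, fabricated]))  # ['fabricated-story', 'accurate-report']
```

Because the score never asks who is engaging, a thousand coordinated accounts are indistinguishable from a thousand genuine readers.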

4. Keyword Manipulation and SEO Abuse

Hackers exploit search engine optimization (SEO) techniques to boost the visibility of fake news sites. By targeting specific keywords, they push their content toward the top of AI search results. Automated tools can scrape legitimate articles and rewrite them to evade plagiarism detection, producing seemingly unique stories that align with a malicious narrative. A NewsGuard investigation documented this tactic, finding AI-generated fake news sites that produced thousands of articles daily and amplified polarizing content.
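Why keyword targeting works against naive ranking can be shown in a small Python sketch. It assumes a hypothetical scorer that counts raw keyword repetitions and contrasts it with the term-frequency saturation used in the well-known BM25 ranking function, simplified here by dropping document-length normalization and inverse document frequency. Production search engines combine many more signals; the function names are illustrative.

```python
import re


def term_frequency(text: str, term: str) -> int:
    """Count case-insensitive whole-word occurrences of term in text."""
    pattern = rf"\b{re.escape(term.lower())}\b"
    return len(re.findall(pattern, text.lower()))


def raw_tf_score(text: str, terms: list[str]) -> float:
    # Naive scoring: every repetition adds the same amount, so stuffing pays off linearly.
    return float(sum(term_frequency(text, t) for t in terms))


def saturated_tf_score(text: str, terms: list[str], k1: float = 1.2) -> float:
    # BM25-style saturation with length normalization omitted:
    # tf * (k1 + 1) / (tf + k1) never exceeds k1 + 1 per term, however often it repeats.
    total = 0.0
    for t in terms:
        tf = term_frequency(text, t)
        total += tf * (k1 + 1) / (tf + k1)
    return total


honest = "Auditors found no evidence of election fraud after reviewing the results."
stuffed = "election fraud " * 50 + "Shocking election fraud claims."
query = ["election", "fraud"]

print(raw_tf_score(honest, query), raw_tf_score(stuffed, query))  # 2.0 102.0
print(round(saturated_tf_score(honest, query), 2), round(saturated_tf_score(stuffed, query), 2))  # 2.0 4.3
```

Under raw counting the stuffed page scores 51 times higher than the honest one; with saturation the advantage shrinks to roughly 2x, which is one reason keyword stuffing alone pays off less against modern ranking functions.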

5. Coordinated Inauthentic Behavior (CIB)

CIB involves orchestrated campaigns in which fake accounts work together to spread disinformation. For example, a Russian bot farm, disrupted by U.S. authorities in 2024, used AI to create “souls,” fake accounts with realistic biographies and profile pictures, to influence public opinion on X. These accounts can post content, comment, and build followings, making their narratives appear legitimate to AI systems that rely on engagement metrics.
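One simple signal of coordination is many distinct accounts posting near-identical text within a short time window. The Python sketch below implements that single heuristic under stated assumptions: the function name `flag_coordinated`, the 3-account and 300-second thresholds, and the account names are hypothetical, and real platform detection also weighs network structure, account age, and other behavioral signals.

```python
import re
from collections import defaultdict
from collections.abc import Iterable


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivial edits still match."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def flag_coordinated(
    posts: Iterable[tuple[str, float, str]],
    min_accounts: int = 3,
    window_seconds: float = 300,
) -> list[str]:
    """Return normalized texts that at least `min_accounts` distinct accounts
    posted within any `window_seconds` span.

    `posts` holds (account, timestamp_in_seconds, text) tuples.
    """
    by_text: dict[str, list[tuple[float, str]]] = defaultdict(list)
    for account, ts, text in posts:
        by_text[normalize(text)].append((ts, account))

    flagged = []
    for text, entries in by_text.items():
        entries.sort()
        start = 0
        # Slide a time window across this text's posts in chronological order.
        for end in range(len(entries)):
            while entries[end][0] - entries[start][0] > window_seconds:
                start += 1
            accounts = {acct for _ts, acct in entries[start : end + 1]}
            if len(accounts) >= min_accounts:
                flagged.append(text)
                break
    return flagged


posts = [
    ("soul_01", 0, "BREAKING: Minister resigns over scandal!"),
    ("soul_02", 40, "Breaking - minister resigns over scandal"),
    ("soul_03", 95, "breaking: Minister resigns over scandal!!"),
    ("reporter", 100, "Minister to hold press conference at noon."),
]
print(flag_coordinated(posts))  # ['breaking minister resigns over scandal']
```

Normalizing before grouping means cosmetic changes in capitalization or punctuation do not hide the duplicates, while the genuine reporter’s post is left alone.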

Possibilities of AI Search Manipulation

The possibilities for exploiting AI search systems are vast and evolving, driven by the accessibility of AI tools and the interconnected nature of digital platforms. Key possibilities include:

1. Political Manipulation

Hackers can manipulate AI search responses to sway public opinion during elections or geopolitical events. For instance, fake news sites targeting the 2024 U.S. elections used AI to generate articles amplifying divisive narratives, such as anti-LGBTQ+ sentiment or criticism of military policy. By flooding social media with such content, hackers increase the likelihood that AI systems will index and prioritize it, influencing voters and eroding trust in democratic processes.

2. Financial Market Disruption

Fake news can destabilize financial markets by spreading false narratives about companies or economic events. In May 2023, an AI-generated image purporting to show an explosion near the Pentagon spread on social media and briefly rattled U.S. stock markets, illustrating the potential for economic disruption. More sophisticated attacks could mislead trading algorithms, which often rely on AI to analyze news sentiment, potentially leading to significant losses.
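The risk to sentiment-driven trading can be illustrated with a toy model. The Python sketch below assumes a hypothetical word-list sentiment scorer and a simple average-below-threshold sell rule; real trading systems use trained language models and many safeguards, so the lexicon, threshold, and headlines here are purely illustrative.

```python
import re

# Hypothetical sentiment lexicon; real systems use trained models, not word lists.
POSITIVE = {"beats", "growth", "record", "surge", "approval"}
NEGATIVE = {"explosion", "attack", "collapse", "fraud", "crash"}


def headline_sentiment(headline: str) -> int:
    """Score = number of positive words minus number of negative words."""
    words = re.findall(r"[a-z]+", headline.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def trading_signal(headlines: list[str], sell_threshold: float = -0.5) -> str:
    """Emit SELL when the average headline sentiment falls to or below the threshold."""
    avg = sum(headline_sentiment(h) for h in headlines) / len(headlines)
    return "SELL" if avg <= sell_threshold else "HOLD"


real_news = [
    "Chipmaker beats earnings forecasts",
    "Retail sales show modest growth",
    "Central bank holds rates steady",
]
fake = "Explosion reported near Pentagon, markets fear attack"

print(trading_signal(real_news))               # HOLD
print(trading_signal(real_news + [fake] * 5))  # SELL
```

A single copy of the fake headline only pulls the average to zero, but five amplified copies push it past the threshold, which is why repetition by bot networks matters as much as the fabrication itself.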

3. Social Engineering and Phishing

AI-generated phishing emails or social media posts, enhanced by behavioral analysis, can trick users into revealing sensitive information. Cybercriminals use AI to craft personalized messages that exploit psychological vulnerabilities, such as urgency or authority. For example, AI-powered bots can analyze social media data to create convincing scams, increasing the success rate of phishing campaigns.

4. Critical Infrastructure Attacks

AI systems managing critical infrastructure, like power grids or transportation, can be misled by manipulated data. Hackers could inject false sensor data or fake news about infrastructure failures, causing AI systems to make faulty decisions, such as shutting down power grids or rerouting traffic, leading to widespread disruption.

5. Reputational Damage

Businesses and individuals are vulnerable to deepfake-driven smear campaigns. Fake customer testimonials or videos alleging faulty products can damage brands, as seen in cases where companies faced losses due to fabricated content. AI search systems amplifying such content can exacerbate reputational harm, as users encounter it in search results.

Mitigation Strategies

Combating AI search manipulation requires a multifaceted approach:

1. Enhanced AI Detection

Developers are creating AI-powered tools to detect deepfakes and fake news, using neural networks to identify anomalies in media. Social media platforms can integrate these tools to flag manipulated content before it reaches AI search systems.

2. Media Literacy Education

Educating users to recognize fake news and deepfakes is critical. Experts suggest watching for “red flags” like odd grammar or inconsistent media elements. Public campaigns, like the 2018 BuzzFeed video in which Jordan Peele voiced a deepfaked Barack Obama, can raise awareness about disinformation risks.

3. Regulatory Frameworks

Governments can promote transparency in how content is created and disseminated. A 2018 report by the European Commission’s High-Level Expert Group on fake news and online disinformation recommended self-regulatory approaches to improve transparency and promote media diversity as ways to reduce the impact of fake news.

4. Platform Accountability

Social media platforms must strengthen efforts to identify and remove fake accounts. The takedown of a Russian bot farm on X shows what proactive action can achieve, though challenges remain due to the sheer scale of AI-driven content.

5. Robust Data Filtering

AI systems can improve data filtering by prioritizing verified sources and cross-referencing information.
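Cross-referencing can be sketched as a corroboration check. The Python below is a minimal sketch, assuming a hypothetical table of per-domain reputation weights: a claim is accepted only if enough distinct domains support it and their combined weight clears a threshold. The domains, weights, and thresholds are illustrative, not a recommended configuration.

```python
from urllib.parse import urlparse

# Hypothetical reputation weights in [0, 1]; unknown domains get a low default.
REPUTATION = {
    "wire-service.example": 0.9,
    "public-health.example": 0.95,
    "regional-paper.example": 0.7,
}
DEFAULT_WEIGHT = 0.1


def domain_of(url: str) -> str:
    """Extract the host name, ignoring a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def is_corroborated(urls: list[str], min_sources: int = 2, min_score: float = 1.5) -> bool:
    """Accept a claim only if enough distinct, reputable domains support it.

    Counting each domain once means 100 copies on one site add no extra weight.
    """
    domains = {domain_of(u) for u in urls}
    score = sum(REPUTATION.get(d, DEFAULT_WEIGHT) for d in domains)
    return len(domains) >= min_sources and score >= min_score


fake_urls = [f"https://fake-news-hub.example/story/{i}" for i in range(100)]
real_urls = ["https://www.wire-service.example/a", "https://public-health.example/b"]

print(is_corroborated(fake_urls))  # False
print(is_corroborated(real_urls))  # True
```

One caveat of this design: many distinct low-reputation domains can still add up past `min_score`, so the weights and thresholds would need tuning, and a production system would also need to check that sources are genuinely independent rather than one operator running many sites.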

FAQs

1. How can fake news affect AI search results?

Fake news can skew AI search results by dominating the data pool with misleading information. If hackers flood social media or websites with fabricated content, AI systems may index and prioritize it, especially if it appears credible due to high engagement or SEO manipulation, leading to inaccurate or biased responses.

2. What role does social media play in AI search manipulation?

Social media platforms amplify fake news through rapid dissemination and fake accounts. Coordinated campaigns, like those using AI-driven bots, can create the illusion of credibility, tricking AI systems into ranking manipulated content higher in search results.

3. Can deepfakes really trick AI systems?

Yes, deepfakes can deceive AI systems if they are realistic and widely shared. AI search engines may index deepfake content as legitimate, especially if it gains traction on platforms like X, leading to the spread of false narratives in responses.

4. What kind of panic can manipulated AI search results cause?

Manipulated AI search results can trigger public panic (e.g., over fake health crises), political instability (e.g., election interference), economic disruption (e.g., market crashes), organizational chaos (e.g., fake data breaches), and national security threats (e.g., false military reports).

5. How can users protect themselves from manipulated AI search results?

Users should cross-check AI search results with primary sources, be skeptical of sensational claims, and look for inconsistencies in media. Reporting suspicious content to platforms and using media literacy skills, like checking for odd grammar or unnatural visuals, can also help.

6. Are there technologies to detect AI search manipulation?

Yes, AI-powered tools can detect deepfakes and fake news by analyzing anomalies in text, audio, or video. Platforms are increasingly integrating these tools to flag manipulated content, though no system is foolproof.

7. What can platforms like X do to prevent AI search exploitation?

Platforms can enhance moderation to remove fake accounts, use AI to detect coordinated inauthentic behavior, and prioritize verified sources. Regular takedowns of bot networks, like the Russian bot farm on X, help reduce the spread of fake news.

8. Can AI systems be made immune to fake news manipulation?

No system is completely immune, but AI can be improved with robust data filtering, cross-referencing verified sources, and continuous updates to detect and mitigate fake news. User feedback also helps refine system accuracy.

Conclusion

The exploitation of AI search systems through fake news and social media poses significant risks, from public panic to national security threats. Hackers leverage data poisoning, deepfakes, and coordinated campaigns to manipulate AI responses, exploiting the trust users place in these systems. The possibilities for harm are vast, including political manipulation, financial disruption, and infrastructure chaos, each capable of triggering widespread panic. Mitigation requires advanced detection, media literacy, regulatory action, and platform accountability. As AI continues to evolve, so must our defenses against its misuse. Users should adopt a “trust but verify” approach, critically evaluating AI outputs and reporting suspicious content to refine system accuracy. By staying vigilant, we can mitigate the impact of these exploits and preserve the integrity of AI-driven information systems.