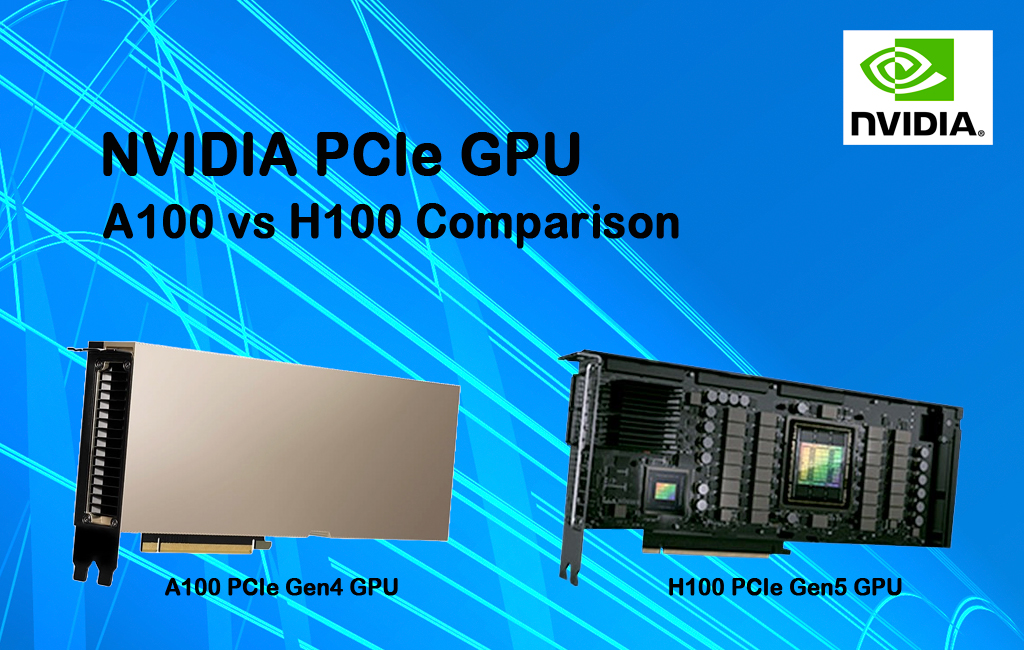

The NVIDIA A100 and H100 PCIe GPUs represent the pinnacle of data center accelerators, designed to power the next generation of artificial intelligence (AI), machine learning (ML), high-performance computing (HPC), and data analytics workloads. Built on NVIDIA’s Ampere and Hopper architectures, respectively, these GPUs deliver exceptional computational power, catering to enterprises, research institutions, and cloud providers. The A100, introduced in 2020, is renowned for its versatility and cost-efficiency, while the H100, launched in 2022, pushes the boundaries with cutting-edge features like the Transformer Engine and FP8 precision, tailored for large-scale AI models and demanding HPC tasks. This article compares their specifications, pricing, performance benchmarks, and use cases to guide decision-makers in selecting the right GPU for their needs.

As AI and HPC demands continue to surge, choosing between the NVIDIA A100 and H100 PCIe GPUs involves balancing performance, cost, and specific workload requirements. The A100’s proven track record and lower price point make it a go-to choice for organizations with diverse or budget-conscious workloads, while the H100’s superior performance and advanced capabilities position it as the ideal solution for cutting-edge applications like large language model (LLM) training and real-time inference. By examining their technical differences, cost structures, and performance metrics, this article provides a comprehensive analysis to help organizations optimize their infrastructure investments for both current and future computational challenges.

Technical Specifications Comparison

| Feature | NVIDIA A100 PCIe (80GB) | NVIDIA H100 PCIe (80GB) |
|---|---|---|
| Architecture | Ampere | Hopper |
| GPU Memory | 80GB HBM2e | 80GB HBM2e |
| Memory Bandwidth | 1.94 TB/s | 2 TB/s |
| CUDA Cores | 6,912 | 14,592 |
| Tensor Cores | 432 (3rd Gen) | 456 (4th Gen) |
| FP64 TFLOPS | 9.7 | 26 |
| FP32 TFLOPS | 19.5 | 51 |
| TF32 Tensor Core TFLOPS | 156 (312 with sparsity) | 378 (756 with sparsity) |
| FP16 Tensor Core TFLOPS | 312 (624 with sparsity) | 756 (1,513 with sparsity) |
| FP8 Tensor Core TFLOPS | N/A | 1,513 (3,026 with sparsity) |
| Interconnect | PCIe Gen 4 (64 GB/s bidirectional) | PCIe Gen 5 (128 GB/s bidirectional) |
| NVLink Bandwidth (via bridge) | 600 GB/s (3rd Gen) | 600 GB/s (4th Gen) |
| Thermal Design Power (TDP) | 300W | 350W |
| Form Factor | PCIe (full-length, dual-slot) | PCIe (full-length, dual-slot) |

The H100 PCIe offers significant advancements over the A100, including roughly twice the CUDA cores, fourth-generation Tensor Cores with FP8 precision (which the A100 lacks), and a PCIe Gen 5 link that doubles host-to-GPU bandwidth. Memory bandwidth and NVLink bridge bandwidth, by contrast, are similar on the two PCIe cards; the 3.35 TB/s HBM3 memory and 900 GB/s NVLink often quoted for the H100 belong to its SXM variant. The H100 PCIe’s gains also come with a higher TDP (350W vs. 300W), requiring more robust cooling solutions.
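To put the PCIe generation difference in concrete terms, the Python sketch below estimates the best-case time to copy a payload from host memory to the GPU. It assumes the bidirectional figures from the table split evenly between directions (32 GB/s and 64 GB/s one way) and ignores protocol overhead, so real transfers will take longer. `min_copy_seconds` is a hypothetical helper, not part of any library.

```python
# Best-case host-to-GPU copy time from PCIe link bandwidth.
# Assumption: the bidirectional figure splits evenly, so one direction gets half;
# real transfers reach less than this theoretical peak.

PCIE_BIDIRECTIONAL_GB_S = {
    "A100 PCIe (Gen 4)": 64.0,
    "H100 PCIe (Gen 5)": 128.0,
}


def min_copy_seconds(payload_gb: float, bidirectional_gb_s: float) -> float:
    """Lower bound on seconds to move payload_gb in one direction over the link."""
    one_way_gb_s = bidirectional_gb_s / 2
    return payload_gb / one_way_gb_s


if __name__ == "__main__":
    payload_gb = 80.0  # e.g., filling the card's full 80GB of memory
    for gpu, bw in PCIE_BIDIRECTIONAL_GB_S.items():
        print(f"{gpu}: >= {min_copy_seconds(payload_gb, bw):.2f} s")
```

For an 80GB payload this gives 2.5 s on PCIe Gen 4 versus 1.25 s on PCIe Gen 5, a difference that matters mostly for workloads that stream data or reload model weights frequently.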

Price Comparison: A100 vs. H100 PCIe GPU

Pricing for the NVIDIA A100 and H100 PCIe GPUs varies based on configuration, vendor, and whether purchased outright or through cloud providers. Below is an overview based on 2025 market data:

- NVIDIA A100 80GB PCIe: Estimated retail price ranges from $10,000 to $13,000, depending on the seller and whether the unit is new or refurbished. Cloud pricing starts at approximately $1.35–$1.80 per hour (e.g., Hyperstack: $1.35/hour, Ori: $1.80/hour).

- NVIDIA H100 80GB PCIe: Retail pricing is typically higher, often exceeding $15,000 due to its newer architecture and higher demand. Cloud pricing ranges from $2.40–$3.50 per hour (e.g., Hyperstack: $2.40/hour, Jarvislabs.ai: $2.99/hour, Ori: $3.08/hour). Recent market trends show H100 cloud pricing dropping from $8/hour to $2.85–$3.50/hour due to increased availability.
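One quick way to weigh the purchase prices against the cloud rates above is to compute how many rental hours equal a card’s purchase price. This sketch uses the article’s price figures and deliberately ignores power, cooling, the host server, utilization, and resale value, all of which shift the real break-even point. `break_even_hours` is an illustrative helper.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def break_even_hours(purchase_usd: float, cloud_usd_per_hour: float) -> float:
    """Rental hours whose cost equals the purchase price (simplified: card price only)."""
    return purchase_usd / cloud_usd_per_hour


# (purchase price in USD, cloud rate in USD/hour), from the figures above
SCENARIOS = {
    "A100 80GB: $10,000 vs. $1.35/h": (10_000, 1.35),
    "A100 80GB: $13,000 vs. $1.80/h": (13_000, 1.80),
    "H100 80GB: $15,000 vs. $2.40/h": (15_000, 2.40),
}

if __name__ == "__main__":
    for label, (price, rate) in SCENARIOS.items():
        hours = break_even_hours(price, rate)
        print(f"{label}: {hours:,.0f} h (~{hours / HOURS_PER_MONTH:.1f} months at 24/7 use)")
```

At continuous use each scenario breaks even in under a year (roughly 8.6 to 10.1 months), and lower utilization stretches that proportionally. Because the H100 case uses the $15,000 floor, its real break-even point is likely later.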

While the H100 costs roughly 71% more per hour in the cloud at Ori’s rates ($3.08 vs. $1.80; about 78% at Hyperstack’s $2.40 vs. $1.35), its superior performance can offset the difference for time-sensitive workloads by reducing training and inference times. For example, training a large language model (LLM) on H100s can be up to 39% cheaper overall when training times are 64% shorter than on A100s.
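The overall cost claim follows from multiplying the hourly price ratio by the ratio of run times. This sketch assumes Ori’s rates and reads the 64% figure as a 64% reduction in wall-clock time; both helper functions are hypothetical names for illustration.

```python
def relative_job_cost(h100_rate: float, a100_rate: float, time_reduction: float) -> float:
    """H100 job cost as a fraction of the A100 job cost.

    time_reduction is the fraction of A100 wall-clock time saved on the H100
    (0.64 means the H100 run takes 36% as long).
    """
    price_ratio = h100_rate / a100_rate  # 3.08 / 1.80 is about 1.71
    time_ratio = 1.0 - time_reduction
    return price_ratio * time_ratio


def break_even_time_reduction(h100_rate: float, a100_rate: float) -> float:
    """Minimum fraction of run time the H100 must save to cost no more overall."""
    return 1.0 - a100_rate / h100_rate


if __name__ == "__main__":
    ratio = relative_job_cost(h100_rate=3.08, a100_rate=1.80, time_reduction=0.64)
    print(f"H100 job cost: {ratio:.1%} of the A100 job ({1 - ratio:.1%} cheaper)")
    print(f"Break-even time reduction: {break_even_time_reduction(3.08, 1.80):.1%}")
```

With these inputs the saving comes out at roughly 38%, close to the figure quoted above. At Ori’s rates the H100 must cut run time by more than about 42% before it becomes the cheaper option.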

Performance Benchmarks: A100 vs. H100

AI Training

- H100 PCIe: Benchmarks show the H100 delivering 2–4x faster training than the A100 for large-scale AI models, particularly transformer-based models like GPT-3 (175B parameters). Its Transformer Engine and FP8 precision enable up to 9x faster training in some cases (NVIDIA’s headline figure, measured on SXM-based systems), and MLPerf results indicate about a 3x speedup for BERT-Large training. Because the two PCIe cards have similar memory bandwidth (2 TB/s vs. about 1.94 TB/s), the gains come mainly from compute throughput, so bandwidth-bound steps speed up less.

- A100 PCIe: The A100 remains highly capable, offering robust performance for training neural networks in computer vision, speech recognition, and NLP. Its peak of 312 FP16 Tensor Core TFLOPS (624 with sparsity) is sufficient for many AI workloads but slower than the H100 for large-scale models.
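Because native FP8 Tensor Core support is the main precision gap between the two cards, code meant to run on both typically checks the device before enabling an FP8 path. The sketch below assumes FP8 Tensor Cores are present at CUDA compute capability 8.9 and above (the A100 reports 8.0, the H100 9.0). `has_fp8_tensor_cores` is a hypothetical helper; PyTorch is used only if installed, through its `torch.cuda.get_device_capability` and `torch.cuda.get_device_name` calls. Actually training in FP8 still requires software support, for example NVIDIA’s Transformer Engine library.

```python
# Hypothetical helper: infer hardware FP8 Tensor Core support from compute capability.
# Assumption: FP8 Tensor Cores appear at compute capability 8.9 (Ada) and 9.0 (Hopper).


def has_fp8_tensor_cores(major: int, minor: int) -> bool:
    return (major, minor) >= (8, 9)


if __name__ == "__main__":
    try:
        import torch  # optional: only used to query a real device
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        major, minor = torch.cuda.get_device_capability(0)
        name = torch.cuda.get_device_name(0)
        print(f"{name}: compute capability {major}.{minor}, "
              f"FP8 Tensor Cores: {has_fp8_tensor_cores(major, minor)}")
    else:
        # No GPU available: fall back to the known capabilities of the two cards.
        for name, cc in {"A100": (8, 0), "H100": (9, 0)}.items():
            print(f"{name}: FP8 Tensor Cores: {has_fp8_tensor_cores(*cc)}")
```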

AI Inference

- H100 PCIe: The H100 excels in inference, typically delivering 1.5–2x faster performance than the A100, and NVIDIA cites up to 30x speedups for the largest LLMs thanks to the Transformer Engine and FP8 support. For models like Llama 2 (70B parameters), reported H100 throughput is 250–300 tokens per second versus about 130 tokens per second on the A100, enabling higher throughput and lower latency.

- A100 PCIe: The A100 is effective for inference tasks like image classification, recommendation systems, and fraud detection, but its lack of native FP8 support limits efficiency for transformer-based models compared to the H100.
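Throughput and hourly price combine into a cost per generated token, which is often the more useful inference metric. This sketch plugs in the token rates quoted above and the cloud prices from the pricing section, assuming each throughput figure corresponds to the GPU-hours being billed. A 70B-parameter model in 16-bit precision needs about 140GB for weights alone and does not fit on one 80GB card, so real deployments shard it across several GPUs; the comparison holds only if both setups use the same number of cards. `usd_per_million_tokens` is an illustrative helper.

```python
def usd_per_million_tokens(usd_per_hour: float, tokens_per_second: float) -> float:
    """Cloud cost to generate one million tokens at a steady throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return usd_per_hour / tokens_per_hour * 1_000_000


# (hourly rate in USD, tokens per second), taken from the figures in this article
SCENARIOS = {
    "A100 @ $1.35/h, 130 tok/s": (1.35, 130),
    "H100 @ $2.40/h, 250 tok/s": (2.40, 250),
    "H100 @ $3.50/h, 300 tok/s": (3.50, 300),
}

if __name__ == "__main__":
    for label, (rate, tps) in SCENARIOS.items():
        print(f"{label}: ${usd_per_million_tokens(rate, tps):.2f} per million tokens")
```

At the low end of its price range the H100 comes out slightly cheaper per token than the A100 ($2.67 vs. $2.88), while at the high end it is more expensive ($3.24), so the per-token advantage depends heavily on the negotiated rate.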

HPC Workloads

- H100 PCIe: The H100’s superior FP64 performance (26 TFLOPS vs. 9.7 TFLOPS) makes it ideal for scientific simulations, weather forecasting, and drug discovery, as well as tasks like 3D FFT and lattice QCD.

- A100 PCIe: The A100 is well-suited for HPC tasks, offering strong FP64 performance and compatibility with multi-GPU setups. It remains a cost-effective choice for less demanding HPC workloads.
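Peak FP64 throughput translates into a lower bound on runtime for compute-bound double-precision work: the time is at least the operation count divided by the peak rate. The sketch assumes NVIDIA’s datasheet non-Tensor FP64 peaks for the PCIe cards (9.7 TFLOPS for the A100, 26 TFLOPS for the H100) and a hypothetical job of 10^15 FP64 operations. Memory- or communication-bound codes will not see the full ratio.

```python
# Assumed datasheet non-Tensor FP64 peaks, in TFLOPS
FP64_PEAK_TFLOPS = {"A100 PCIe": 9.7, "H100 PCIe": 26.0}


def min_runtime_seconds(total_flops: float, peak_tflops: float) -> float:
    """Lower bound on runtime for compute-bound work at the card's peak rate."""
    return total_flops / (peak_tflops * 1e12)


if __name__ == "__main__":
    job_flops = 1e15  # hypothetical job: one quadrillion FP64 operations
    for gpu, peak in FP64_PEAK_TFLOPS.items():
        print(f"{gpu}: >= {min_runtime_seconds(job_flops, peak):.1f} s")
```

This prints about 103.1 s for the A100 and 38.5 s for the H100, roughly a 2.7x gap at peak; sustained rates in real simulations are lower on both cards.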

Power Efficiency

- H100 PCIe: Despite a higher TDP (350W vs. 300W), the H100 is more power-efficient, reaching about 8.6 peak FP8 TFLOPS per watt (3,026 sparse FP8 TFLOPS ÷ 350W) versus about 2.1 peak FP16 TFLOPS per watt for the A100 (624 ÷ 300W), which has no FP8 support. This makes the H100 more cost-effective per watt for optimized AI workloads.

- A100 PCIe: The A100’s lower TDP makes it preferable in power-constrained environments, but it is less efficient for FP8-based tasks due to its lack of native support.
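The efficiency figure above is a ratio of peak throughput to TDP. The sketch reproduces it from NVIDIA’s datasheet peaks with sparsity, assumed here to be 624 FP16 TFLOPS at 300W for the A100 PCIe and 1,513 FP16 and 3,026 FP8 TFLOPS at 350W for the H100 PCIe. Real power draw and sustained throughput differ from these nameplate values, so treat the output as an upper-bound comparison.

```python
def tflops_per_watt(peak_tflops: float, tdp_watts: float) -> float:
    """Nameplate efficiency: peak throughput divided by maximum board power."""
    return peak_tflops / tdp_watts


# (peak Tensor Core TFLOPS with sparsity, TDP in watts); assumed datasheet values
CASES = {
    "A100 PCIe, FP16": (624, 300),
    "H100 PCIe, FP16": (1513, 350),
    "H100 PCIe, FP8": (3026, 350),
}

if __name__ == "__main__":
    for label, (tflops, watts) in CASES.items():
        print(f"{label}: {tflops_per_watt(tflops, watts):.2f} TFLOPS/W")
```

Comparing like with like (FP16 on both), the H100 roughly doubles efficiency, 4.32 vs. 2.08 TFLOPS/W; the 8.6 TFLOPS/W figure additionally depends on dropping to FP8.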

Use Cases

NVIDIA A100 PCIe

The A100 PCIe is a versatile, cost-effective option for organizations with diverse or less demanding workloads:

- AI Model Training and Fine-Tuning: Ideal for training deep learning models in computer vision, speech recognition, and NLP, especially for smaller-scale models or budget-conscious projects.

- AI Inference: Suitable for inference tasks like image classification, recommendation systems, and fraud detection, where high throughput is needed but not at the scale of cutting-edge LLMs.

- HPC Tasks: Effective for scientific simulations, genome sequencing, and data analytics, particularly in environments that use Multi-Instance GPU (MIG) to partition each card into up to seven isolated instances.

- Cloud Computing: Widely adopted in cloud platforms like Microsoft Azure for mixed workloads, offering robust performance and compatibility with existing infrastructure.

NVIDIA H100 PCIe

The H100 PCIe is tailored for high-performance, large-scale AI and HPC workloads:

- Large-Scale AI Training: Optimized for training large transformer models (e.g., GPT-style LLMs and BERT) and vision transformers, leveraging the Transformer Engine and FP8 precision for faster training times.

- AI Inference for LLMs: Excels in real-time applications like conversational AI, real-time translation, and retrieval-augmented generation (RAG), offering higher token generation rates and lower latency.

- Advanced HPC: Ideal for computationally intensive tasks like lattice QCD, 3D FFT, and drug discovery, where its higher FP64 performance provides significant advantages.

- Confidential Computing: The H100’s trusted execution environment (TEE) supports secure AI workloads, making it suitable for industries with strict privacy and regulatory requirements.

Choosing Between A100 and H100 PCIe GPU

When to Choose the A100 PCIe GPU

- Budget Constraints: The A100 is more cost-effective, with lower upfront and operational costs, making it suitable for organizations with limited budgets or less demanding workloads.

- Mixed Workloads: Its versatility and MIG support make it a cost-effective fit for environments running multiple applications concurrently, such as cloud providers or research institutions; the H100 also supports MIG but costs more per card.

- Power-Constrained Environments: The lower TDP (300W) reduces cooling and power costs, appealing to data centers with energy limitations.

- Legacy Compatibility: The A100’s mature software stack and widespread availability make it a reliable choice for existing infrastructure.

When to Choose the H100 PCIe GPU

- Cutting-Edge AI Workloads: Organizations training or deploying large-scale LLMs or transformer-based models benefit from the H100’s Transformer Engine and FP8 support, which significantly reduce training and inference times.

- High-Performance HPC: For tasks requiring maximum computational throughput, such as scientific simulations or drug discovery, the H100’s much higher FP64 throughput delivers a substantial performance advantage.

- Future-Proofing: The H100’s newer architecture and features like FP8 support and PCIe Gen 5 leave it better positioned for next-generation AI and HPC software.

- Cost-Performance Trade-Off: For time-sensitive projects, the H100’s faster processing can offset its higher cost by reducing overall training time and resource usage.
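The main criteria in the two lists above can be condensed into a rough rubric. The sketch below is a hypothetical scoring helper, not a benchmark-backed model: each H100-favoring and each A100-favoring criterion counts one point, and ties go to the cheaper A100. The field names and equal weights are assumptions to adjust to your own priorities.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # Criteria favoring the H100 PCIe
    large_transformer: bool = False   # LLM/transformer training or inference that can use FP8
    fp64_heavy: bool = False          # HPC dominated by double-precision math
    time_sensitive: bool = False      # faster turnaround justifies a higher hourly rate
    # Criteria favoring the A100 PCIe
    budget_constrained: bool = False  # upfront or hourly cost dominates the decision
    mixed_workloads: bool = False     # many smaller jobs sharing GPUs via MIG
    power_constrained: bool = False   # tight per-card power or cooling budget


def suggest_gpu(w: Workload) -> str:
    """Hypothetical one-point-per-criterion rubric; ties favor the cheaper A100."""
    h100_score = sum([w.large_transformer, w.fp64_heavy, w.time_sensitive])
    a100_score = sum([w.budget_constrained, w.mixed_workloads, w.power_constrained])
    return "H100 PCIe" if h100_score > a100_score else "A100 PCIe"


if __name__ == "__main__":
    llm_lab = Workload(large_transformer=True, time_sensitive=True, budget_constrained=True)
    print(suggest_gpu(llm_lab))  # H100 PCIe (2 points vs. 1)
```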

Conclusion

The NVIDIA A100 and H100 PCIe GPUs are both exceptional choices for AI, ML, and HPC workloads, but they cater to different needs. The A100 PCIe offers a cost-effective, versatile solution for organizations with diverse or less intensive workloads, while the H100 PCIe is the superior choice for cutting-edge AI training, inference, and HPC tasks requiring maximum performance. Price-wise, the A100 is more affordable, with retail costs of $10,000–$13,000 and cloud rates of $1.35–$1.80/hour, compared to the H100’s $15,000+ retail and $2.40–$3.50/hour cloud pricing. Performance benchmarks show the H100 delivering 2–4x faster training and typically 1.5–2x faster inference (up to 30x in NVIDIA’s figures for the largest LLMs), driven by its Hopper architecture, fourth-generation Tensor Cores, and FP8 support.

Ultimately, the choice depends on workload requirements, budget, and infrastructure constraints. For cost-conscious or mixed-use environments, the A100 remains a strong contender. For organizations prioritizing performance and future-proofing for large-scale AI and HPC, the H100 PCIe is the clear winner. Evaluate your specific needs, including model size, computational intensity, and power constraints, to select the GPU that best aligns with your goals.