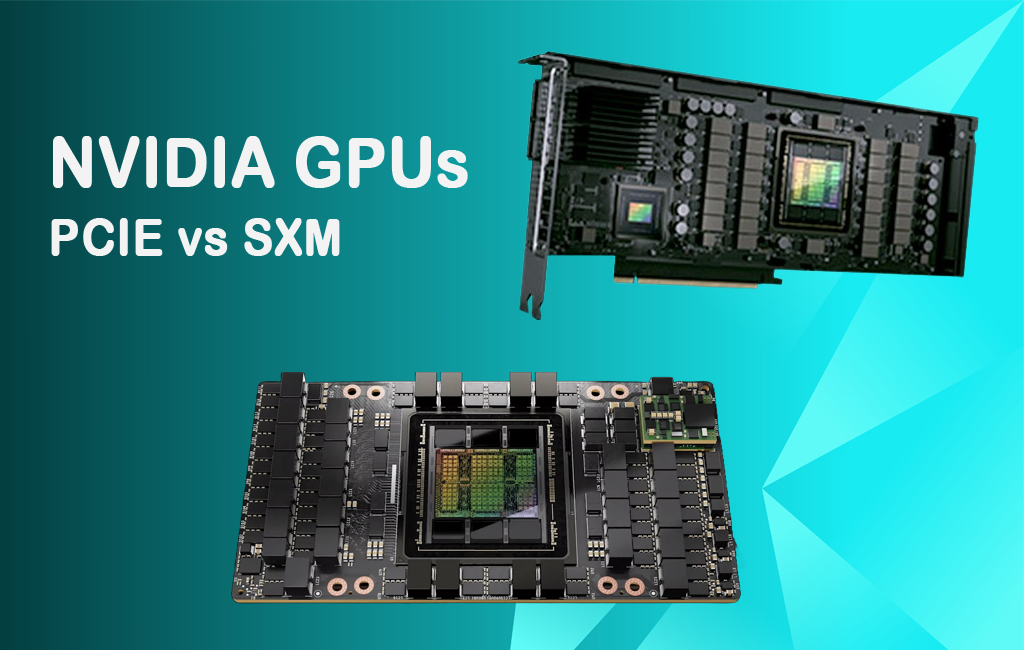

In the rapidly evolving landscape of artificial intelligence (AI) and high-performance computing (HPC), NVIDIA’s data center GPUs remain at the forefront. As of 2025, the lineup spans the Hopper architecture (H100, H200) and the newer Blackwell architecture (B200). These GPUs are offered in two key form factors: PCIe (Peripheral Component Interconnect Express) and SXM (commonly expanded as Server PCI Express Module), each tailored to specific deployment scenarios and performance needs. Understanding the differences between these form factors is critical for optimizing AI training, inference, and HPC workloads. PCIe GPUs provide versatility and compatibility with standard server infrastructures, making them ideal for general-purpose computing, inference, and smaller-scale AI tasks. In contrast, SXM GPUs are engineered for high-density, high-performance environments, offering superior scalability and performance for large-scale AI training and HPC applications. With NVIDIA’s roadmap extending to future architectures like Rubin (slated for 2026), the form factor you choose today also shapes how easily your infrastructure can adopt upcoming generations.

The decision between PCIe and SXM GPUs hinges on the specific demands of your workload and infrastructure. For instance, PCIe GPUs are well-suited for deploying trained AI models or running inference tasks, as they are more cost-effective and easier to integrate into existing systems. However, for training trillion-parameter models or conducting complex scientific simulations, SXM GPUs deliver the necessary power, memory bandwidth, and interconnectivity. As NVIDIA’s GPU ecosystem continues to evolve, staying informed about the latest developments—such as the upcoming Rubin architecture—can help organizations plan for future upgrades while maximizing the performance of their current deployments. This comparison not only highlights the technical differences between PCIe and SXM but also underscores their strategic importance in harnessing the full potential of NVIDIA’s cutting-edge GPUs for 2025 and beyond.

Key Points

- PCIe GPUs are versatile, cost-effective, and compatible with standard servers, making them suitable for inference, general-purpose computing, and smaller-scale AI tasks.

- SXM GPUs offer superior performance and scalability for large-scale AI training and high-performance computing (HPC), but require specialized infrastructure.

- Performance Trade-offs: SXM GPUs provide higher memory bandwidth and a higher power envelope, while PCIe GPUs are sufficient for less demanding workloads.

- Decision Basis: The choice between PCIe and SXM is a technical one, driven by workload, infrastructure, and budget.

Comparison of NVIDIA PCIe vs SXM GPUs

1. Form Factor and Integration

- PCIe GPUs:

- Description: PCIe GPUs connect to a motherboard via standard PCIe slots (typically x16), ensuring compatibility with most server and workstation setups.

- Advantages: Easy to install, swap, or upgrade, ideal for modular systems or retrofitting existing infrastructure.

- Example: The NVIDIA H100 PCIe uses a PCIe Gen5 x16 interface, offering roughly 64 GB/s in each direction (about 128 GB/s bidirectional), and fits into standard servers like Dell’s R760xa.

- Use Case: Suitable for businesses with standard data center setups or those needing flexible hardware configurations.

- SXM GPUs:

- Description: SXM GPUs are proprietary socketed modules integrated directly onto NVIDIA’s HGX or DGX server boards, designed for high-density computing.

- Advantages: Optimized for high-performance environments, with direct connections to the system for enhanced bandwidth and power delivery.

- Example: The H100 SXM5 is socketed onto HGX boards, enabling seamless integration in NVIDIA’s DGX systems.

- Use Case: Ideal for hyperscalers or research labs with custom-built, high-performance server infrastructure.

2. Interconnect and Bandwidth

- PCIe GPUs:

- Interconnect: Uses PCIe lanes for GPU-to-GPU and GPU-to-CPU communication, with PCIe Gen5 x16 providing ~64 GB/s in each direction (~128 GB/s bidirectional).

- NVLink Option: Can use NVLink Bridges for paired GPUs, offering up to 600 GB/s bidirectional bandwidth, but limited to two GPUs per bridge.

- Performance: Sufficient for workloads with moderate GPU-to-GPU communication needs, such as inference or rendering.

- SXM GPUs:

- Interconnect: Leverages NVIDIA’s NVLink (4th generation in H100/H200, up to 900 GB/s per GPU) and NVSwitch for high-bandwidth GPU-to-GPU communication.

- Scalability: Supports up to 8 GPUs on a single HGX board, with aggregate NVLink bandwidth of about 7.2 TB/s (8 × 900 GB/s), so the board can behave much like one large logical GPU.

- Performance: Excels in multi-GPU setups for large-scale AI training or HPC tasks requiring massive data exchange.
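
As a rough illustration of the interconnect figures above, the sketch below computes the aggregate NVLink bandwidth of an 8-GPU HGX board and the idealized time to copy a buffer one way between two GPUs over each link. It assumes PCIe Gen5 x16 delivers about 64 GB/s in each direction, that a one-way copy can use half of each quoted bidirectional figure, and that latency and protocol overhead are zero. The 10 GB payload and the function names are illustrative, not taken from any NVIDIA tool.

```python
# Peak bidirectional bandwidth per GPU in GB/s, using the figures quoted above.
BIDIRECTIONAL_GBPS = {
    "PCIe Gen5 x16": 128.0,              # assumption: ~64 GB/s in each direction
    "NVLink Bridge (H100 PCIe)": 600.0,
    "NVLink 4 (H100 SXM5)": 900.0,
}


def aggregate_bandwidth_gbps(per_gpu_gbps: float, num_gpus: int) -> float:
    """Sum of per-GPU link bandwidth across a board (a peak, not a measurement)."""
    return per_gpu_gbps * num_gpus


def ideal_transfer_seconds(payload_gb: float, bidirectional_gbps: float) -> float:
    """Idealized one-way copy time: only half the bidirectional rate is usable."""
    return payload_gb / (bidirectional_gbps / 2.0)


if __name__ == "__main__":
    total = aggregate_bandwidth_gbps(BIDIRECTIONAL_GBPS["NVLink 4 (H100 SXM5)"], 8)
    print(f"8-GPU HGX aggregate NVLink: {total / 1000:.1f} TB/s")
    for link, gbps in BIDIRECTIONAL_GBPS.items():
        ms = ideal_transfer_seconds(10.0, gbps) * 1000
        print(f"{link}: ~{ms:.0f} ms to move 10 GB one way")
```

Real transfers will be slower than these ideal numbers, but the ratio between the links is the point: the gap between PCIe and NVLink grows with every byte that has to cross between GPUs.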

3. Performance

- PCIe GPUs:

- Specifications: Lower power envelope (300–350W TDP for H100 PCIe, up to 400W for the H100 NVL variant) and reduced memory bandwidth (e.g., H100 PCIe: ~2 TB/s with HBM2e).

- Performance Metrics: Adequate for inference and smaller-scale AI training. For example, H100 PCIe achieves ~98.8 tokens/second for Llama2 7B inference.

- Use Case: Best for deploying trained models or general-purpose computing tasks like rendering or virtualization.

- SXM GPUs:

- Specifications: Higher power (up to 700W TDP for H100 SXM5) and faster memory (e.g., H100 SXM5: ~3.35 TB/s with HBM3).

- Performance Metrics: Superior for large-scale AI training and HPC. For instance, NVIDIA SXM4 A100 outperforms NVIDIA PCIe A100 by nearly 2x in BERT-Large training on MLPerf benchmarks.

- Use Case: Ideal for training trillion-parameter models or running complex scientific simulations.
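
One way to see why memory bandwidth matters for inference: in single-stream (batch size 1) LLM decoding, each generated token requires reading roughly all of the model weights from GPU memory, so memory bandwidth divided by model size gives a ceiling on tokens per second. The sketch below applies that back-of-envelope bound to the bandwidth figures above. It assumes FP16 weights (2 bytes per parameter) and ignores KV-cache traffic, compute time, and kernel overhead, so treat it as an approximation rather than a benchmark; the function name is illustrative.

```python
def decode_upper_bound_tokens_per_s(
    params_billion: float,
    mem_bandwidth_tb_s: float,
    bytes_per_param: float = 2.0,  # assumption: FP16/BF16 weights
) -> float:
    """Bandwidth-bound ceiling for batch-size-1 decoding.

    Assumes every generated token reads all weights from memory exactly once.
    """
    model_bytes = params_billion * 1e9 * bytes_per_param
    return (mem_bandwidth_tb_s * 1e12) / model_bytes


if __name__ == "__main__":
    for name, bandwidth in [("H100 PCIe", 2.0), ("H100 SXM5", 3.35)]:
        bound = decode_upper_bound_tokens_per_s(7.0, bandwidth)
        print(f"{name}: at most ~{bound:.0f} tokens/s for a 7B FP16 model")
```

Measured single-stream throughput should land below this ceiling. Batched serving can exceed it in aggregate, because one pass over the weights then produces a token for every sequence in the batch.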

4. Power and Cooling

- PCIe GPUs:

- Power Consumption: Lower TDP (300–400W), reducing demands on power supplies and cooling systems.

- Cooling: Typically air-cooled, though liquid cooling is supported in some setups, making them easier to manage in standard data centers.

- Example: H100 PCIe can operate in air-cooled servers with standard cooling infrastructure.

- SXM GPUs:

- Power Consumption: Higher TDP (up to 700W), requiring robust power delivery systems.

- Cooling: Air-cooled HGX designs exist, but the high power draw and dense configurations often push deployments toward liquid cooling and specialized infrastructure.

- Example: H100 SXM5 systems ship in both air-cooled configurations (such as NVIDIA DGX H100) and liquid-cooled configurations, with liquid cooling favored for dense racks.
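
To make the power gap concrete, the following sketch compares GPU-only power for an 8-GPU server at the TDP figures above and counts how many GPUs fit under a fixed power budget. The 14,000 W budget is a hypothetical number chosen for illustration, and CPU, memory, networking, and cooling overhead are deliberately left out.

```python
def gpu_power_kw(num_gpus: int, tdp_watts: int) -> float:
    """GPU-only power draw at TDP, in kilowatts (excludes CPUs, fans, NICs)."""
    return num_gpus * tdp_watts / 1000


def gpus_within_budget(budget_watts: int, tdp_watts: int) -> int:
    """How many GPUs fit under a GPU-only power budget (integer division)."""
    return budget_watts // tdp_watts


if __name__ == "__main__":
    print(f"8 x H100 PCIe (350 W): {gpu_power_kw(8, 350):.1f} kW")
    print(f"8 x H100 SXM5 (700 W): {gpu_power_kw(8, 700):.1f} kW")
    budget = 14_000  # hypothetical GPU-only budget in watts
    print(f"PCIe GPUs under {budget} W: {gpus_within_budget(budget, 350)}")
    print(f"SXM GPUs under {budget} W: {gpus_within_budget(budget, 700)}")
```

At the same budget you can power twice as many PCIe cards, which is part of why SXM deployments need purpose-built power delivery and cooling.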

5. Scalability

- PCIe GPUs:

- Scalability: Scales across servers using InfiniBand or Ethernet, but GPU-to-GPU communication is slower due to PCIe limitations.

- Limitations: NVLink Bridges are limited to paired GPUs, restricting multi-GPU setups.

- Use Case: Best for small- to medium-scale deployments or inference-focused clusters.

- SXM GPUs:

- Scalability: Designed for massive scalability, supporting up to 256 GPUs in NVIDIA SuperPODs using NVLink Switch Systems and InfiniBand.

- Advantages: All GPUs in an HGX board are fully interconnected via NVSwitch, enabling seamless scaling for large AI models.

- Use Case: Preferred by hyperscalers and research labs for training trillion-parameter models.
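
The scaling difference shows up most clearly in collective operations such as gradient all-reduce during training. The sketch below uses the standard bandwidth-only cost model for a ring all-reduce, in which each GPU sends 2 × (N − 1) / N of the payload, to compare an 8-GPU group over NVLink and over PCIe. It assumes per-direction link rates of 450 GB/s (half of NVLink's 900 GB/s) and 64 GB/s (PCIe Gen5 x16), and it ignores latency, topology, and contention on shared PCIe switches. The 10 GB gradient size is illustrative.

```python
import math


def ring_allreduce_seconds(payload_gb: float, num_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only ring all-reduce time.

    Each GPU sends 2 * (N - 1) / N of the payload over its outgoing link.
    """
    if num_gpus < 2:
        return 0.0  # nothing to reduce across a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gbps


def nodes_needed(total_gpus: int, gpus_per_node: int = 8) -> int:
    """Number of 8-GPU HGX nodes required to reach a target GPU count."""
    return math.ceil(total_gpus / gpus_per_node)


if __name__ == "__main__":
    for name, rate in [("NVLink (450 GB/s per direction)", 450.0),
                       ("PCIe Gen5 x16 (64 GB/s per direction)", 64.0)]:
        ms = ring_allreduce_seconds(10.0, 8, rate) * 1000
        print(f"{name}: ~{ms:.0f} ms per 10 GB all-reduce across 8 GPUs")
    print(f"HGX nodes for 256 GPUs: {nodes_needed(256)}")
```

Because an all-reduce typically runs on every training step, even this idealized gap compounds quickly over a long training run.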

6. Cost and Availability

- PCIe GPUs:

- Cost: Lower upfront cost due to compatibility with standard servers, reducing infrastructure investment.

- Availability: More widely available and easier to source for general data centers.

- Example: H100 PCIe is cost-effective for startups or inference tasks.

- SXM GPUs:

- Cost: Higher cost due to specialized HGX/DGX systems, advanced cooling, and power requirements.

- Availability: Limited to NVIDIA’s ecosystem, with potential supply constraints for high-demand GPUs like H100 SXM.

- Example: H100 SXM is pricier but justified for large-scale AI training.

7. Use Cases

- PCIe GPUs:

- Inference: Optimized for deploying trained models, such as the H100 NVL, which NVIDIA has positioned for LLMs up to 175 billion parameters.

- General-Purpose Computing: Suitable for rendering, virtualization, or smaller AI training tasks.

- Startups/SMBs: Cost-effective for experimentation or modular deployments.

- SXM GPUs:

- AI Training: Ideal for large-scale training of LLMs or foundational models (e.g., H100/H200 SXM for trillion-parameter models).

- HPC: Scientific simulations, big data analytics, or supercomputing.

- Hyperscalers/Research: Multi-GPU clusters for cutting-edge AI or exascale computing.

8. Specific GPU Models (2025 Update)

- H100:

- PCIe: 80 GB HBM2e, ~2 TB/s bandwidth, 300–350W TDP, NVLink Bridge optional (600 GB/s).

- SXM5: 80 GB HBM3, ~3.35 TB/s bandwidth, 700W TDP, NVLink with 900 GB/s.

- H200:

- SXM: 141 GB HBM3e, ~4.8 TB/s bandwidth, up to ~2x the inference speed of H100 SXM on LLMs such as Llama 2.

- Note: A PCIe variant (H200 NVL) exists, but SXM is the primary form factor.

- B200:

- SXM6: 192 GB HBM3e, designed for trillion-parameter models, available only in HGX/DGX systems.
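
A quick way to relate these memory capacities to model size is to count how many GPUs are needed just to hold the weights. The sketch below does this for the capacities listed above, assuming FP16 weights (2 bytes per parameter). It covers weights only: activations, KV cache, and optimizer state are ignored, so real deployments need more headroom. The class and function names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: int


# Memory capacities as listed in this section.
SPECS = [
    GpuSpec("H100 (PCIe or SXM5)", 80),
    GpuSpec("H200 SXM", 141),
    GpuSpec("B200", 192),
]


def min_gpus_for_weights(
    params_billion: float,
    memory_gb: int,
    bytes_per_param: float = 2.0,  # assumption: FP16/BF16 weights
) -> int:
    """Minimum GPU count to hold the weights alone (no activations or KV cache)."""
    weights_gb = params_billion * bytes_per_param  # 1e9 params x bytes = GB
    return math.ceil(weights_gb / memory_gb)


if __name__ == "__main__":
    for params in (70.0, 1000.0):
        for spec in SPECS:
            count = min_gpus_for_weights(params, spec.memory_gb)
            print(f"{params:.0f}B params on {spec.name}: at least {count} GPU(s)")
```

Even under these generous assumptions, a trillion-parameter model spans many GPUs, which is where the NVLink and NVSwitch fabric of SXM systems earns its cost.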

Summary Table

| Feature | PCIe | SXM |
|---|---|---|
| Form Factor | Plugs into standard PCIe slots | Socketed onto HGX/DGX boards |
| Interconnect | PCIe Gen5 x16 (~64 GB/s per direction), NVLink Bridge (600 GB/s) | NVLink (900 GB/s per GPU), NVSwitch |
| Memory Bandwidth | Lower (e.g., H100: ~2 TB/s HBM2e) | Higher (e.g., H100: ~3.35 TB/s HBM3) |
| Power (TDP) | 300–400W | Up to 700W |
| Cooling | Air or liquid, simpler | Air or liquid, with liquid preferred for dense racks; more complex |
| Scalability | NVLink limited to pairs, InfiniBand or Ethernet for clusters | Up to 256 GPUs with NVLink Switch |
| Cost | Lower, standard infrastructure | Higher, specialized systems |
| Use Cases | Inference, general-purpose, startups | AI training, HPC, hyperscalers |

FAQs

- What is the main difference between PCIe and SXM GPUs?

- PCIe GPUs are standard and plug into PCIe slots, while SXM GPUs are socketed directly onto specialized motherboards for higher performance and scalability.

- Which form factor is better for AI training?

- SXM GPUs are better for AI training due to their higher memory bandwidth, power, and scalability, especially for large models.

- Can I use SXM GPUs in my existing server infrastructure?

- Generally, no. SXM GPUs require specific NVIDIA HGX or DGX systems, whereas PCIe GPUs can be used in standard servers.

- What are the power requirements for SXM GPUs?

- SXM GPUs can have higher power requirements, up to 700W per GPU, necessitating advanced cooling and power delivery systems.

- Are there any cost differences between PCIe and SXM GPUs?

- Yes, SXM GPUs and their required systems are typically more expensive than PCIe GPUs, which can be used in more cost-effective standard servers.

- Which GPU form factor should I choose for inference tasks?

- PCIe GPUs are more suitable for inference tasks due to their lower cost, power consumption, and compatibility with standard infrastructures.

- What is NVLink, and how does it differ between PCIe and SXM?

- NVLink is NVIDIA’s high-speed interconnect technology. In PCIe GPUs, it’s used via bridges for paired GPUs, while in SXM, it’s integrated for multi-GPU setups with higher bandwidth.

- Can I mix PCIe and SXM GPUs in the same system?

- No, they are designed for different form factors and cannot be mixed in the same system.

- What are the latest GPU models available in both form factors?

- As of 2025, the H100 is available in both PCIe and SXM5, while the H200 and B200 are primarily in SXM form factors.

- How does the memory differ between PCIe and SXM GPUs?

- SXM GPUs often have faster memory. In the H100 generation, for example, the SXM5 module uses HBM3 (~3.35 TB/s), while the PCIe card uses HBM2e (~2 TB/s).

Conclusion

Choosing between NVIDIA’s PCIe and SXM GPUs depends on your specific needs, infrastructure, and budget. PCIe GPUs offer flexibility and cost-effectiveness for inference, general-purpose computing, or smaller-scale AI tasks. SXM GPUs, with their superior performance and scalability, are the go-to choice for large-scale AI training, HPC, or hyperscale environments, provided you have the infrastructure to support them. By understanding your workload and infrastructure constraints, you can make an informed decision to optimize your computing environment. For more details on NVIDIA’s GPU offerings, visit NVIDIA’s official site.