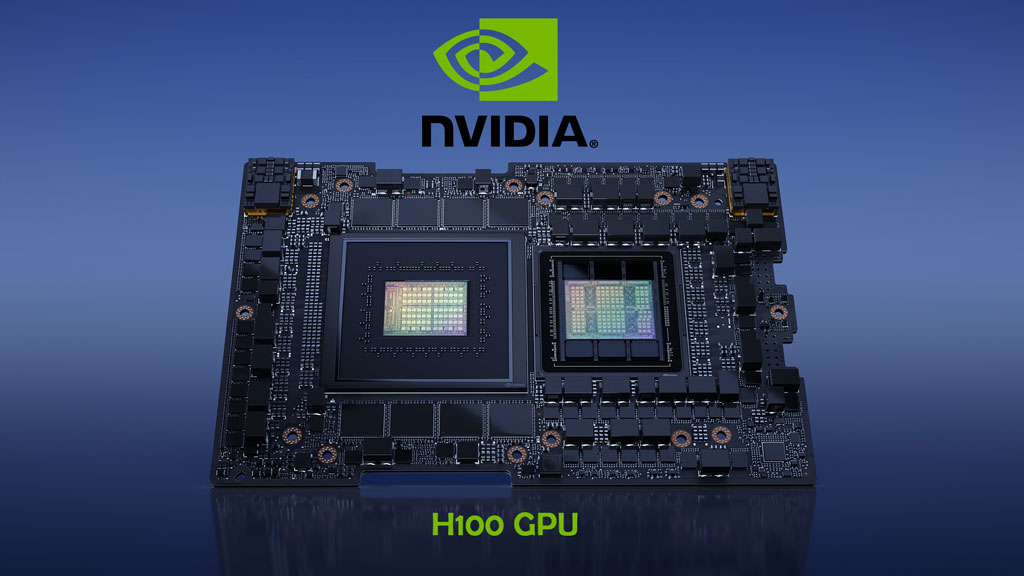

The NVIDIA H100 GPU has become a central piece of hardware in artificial intelligence (AI) research and development, changing how researchers and developers approach large computational tasks. Built on the Hopper architecture, the H100 is engineered for modern AI workloads, with a focus on performance, scalability, and efficiency. This article examines the impact of the H100 on AI research and development, exploring its key features, real-world applications, and the broader implications for the future of AI innovation.

Architectural Advancements: The Hopper Architecture and Transformer Engine

At the heart of the H100’s capabilities is the Hopper architecture, which represents a significant step forward in GPU design. Like the earlier Volta and Ampere data-center architectures, Hopper is built primarily for AI and high-performance computing rather than graphics, and it pushes that specialization further. A standout feature of this architecture is the Transformer Engine, which combines fourth-generation Tensor Cores with software that dynamically chooses between FP8 and 16-bit precision to accelerate the training and inference of transformer-based models. These models, which are the backbone of many advanced AI applications such as large language models (LLMs) and vision transformers, require immense computational power to process vast amounts of data efficiently.

The Transformer Engine enables the H100 to handle these workloads with remarkable speed and efficiency, reducing the time required for both training and deploying AI models. This acceleration is critical for researchers working on complex projects, as it allows them to iterate more quickly and explore larger, more sophisticated models that were previously computationally prohibitive.

Precision Flexibility: Optimizing for Speed and Accuracy

Another key innovation of the H100 is its support for multiple precision types, including FP8, FP16, FP32, and FP64. This flexibility allows researchers to tailor their computations to the specific needs of their projects. For instance, lower precision formats like FP8 can significantly speed up processing times while maintaining acceptable levels of accuracy for certain tasks, such as inference in deployed models. On the other hand, higher precision formats like FP64 are essential for tasks that require extreme accuracy, such as scientific simulations or financial modeling.

This ability to balance speed and precision is particularly valuable in AI research, where different stages of model development—such as training, fine-tuning, and inference—often have varying computational requirements. By enabling researchers to optimize their workflows, the H100 enhances both the efficiency and effectiveness of AI development.
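The trade-off between precision and accuracy can be illustrated on any machine, without a GPU. The following is a minimal sketch that stores a value in 16-, 32-, and 64-bit IEEE 754 formats using Python's standard `struct` module and measures the rounding error. FP8 is left out because `struct` has no 8-bit floating-point format; the helper names `round_trip` and `relative_error` are illustrative, not part of any NVIDIA tooling.

```python
import struct

# struct format codes: 'e' = 16-bit half, 'f' = 32-bit single, 'd' = 64-bit double.
FORMATS = {"FP16": "<e", "FP32": "<f", "FP64": "<d"}


def round_trip(value: float, fmt: str) -> float:
    """Store `value` in the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def relative_error(value: float, fmt: str) -> float:
    """Relative rounding error introduced by storing `value` in `fmt`."""
    stored = round_trip(value, fmt)
    return abs(stored - value) / abs(value)


if __name__ == "__main__":
    x = 0.1  # not exactly representable in binary floating point
    for name, fmt in FORMATS.items():
        size = struct.calcsize(fmt)
        print(f"{name}: {size} bytes, relative error {relative_error(x, fmt):.2e}")
```

Each halving of the storage size cuts memory traffic in half but increases the rounding error by several orders of magnitude, which is why lower precision suits error-tolerant stages such as inference while FP64 remains the choice for simulations.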

Scalability: Powering Exascale Workloads

The H100’s scalability is another factor that sets it apart from previous GPU generations. Through the NVLink Switch System, up to 256 H100 GPUs can be connected to function as a unified cluster capable of handling exascale workloads, that is, tasks that require at least one exaflop (10^18 floating-point operations per second) of computing power. Aggregate figures at this scale are normally quoted for low-precision formats such as FP8 rather than for FP64. This level of scalability is essential for large-scale AI projects, such as training massive LLMs or simulating complex physical systems, which demand enormous computational resources.

For example, it was reported in 2025 that 75% of data centers used NVIDIA H100 GPU clusters to process terabyte-scale datasets in weeks, a task that would have taken significantly longer on older hardware. Each H100 in its SXM form carries 80GB of HBM3 memory; the 141GB HBM3e configuration sometimes attributed to it belongs to its successor, the H200. This capability not only accelerates research timelines but also enables the exploration of new frontiers in AI, where the size and complexity of models are far less constrained by hardware than before.
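To make the exascale threshold concrete, the sketch below estimates how many GPUs are needed to reach one exaflop of aggregate peak throughput. The per-GPU figures are assumptions taken from approximate published peak numbers for the H100 SXM and should be checked against the current datasheet; real workloads sustain only a fraction of peak, so these counts are lower bounds.

```python
import math

EXAFLOP = 1e18  # floating-point operations per second

# ASSUMED approximate peak throughput per H100 SXM GPU, in FLOP/s.
# These values are illustrative; verify them against the official datasheet.
ASSUMED_PEAK_FLOPS = {
    "FP8 Tensor Core (with sparsity)": 3.958e15,
    "FP8 Tensor Core (dense)": 1.979e15,
    "FP64 Tensor Core": 67e12,
}


def gpus_for_target(target_flops: float, per_gpu_flops: float) -> int:
    """Smallest GPU count whose summed peak throughput reaches the target."""
    if per_gpu_flops <= 0:
        raise ValueError("per_gpu_flops must be positive")
    return math.ceil(target_flops / per_gpu_flops)


if __name__ == "__main__":
    for label, flops in ASSUMED_PEAK_FLOPS.items():
        print(f"{label}: {gpus_for_target(EXAFLOP, flops)} GPUs for one exaflop")
```

Under these assumptions, a cluster of a few hundred GPUs reaches an exaflop only in sparse FP8, while FP64 would need thousands more, which is why "exascale" claims for AI clusters should always be read together with the precision they refer to.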

Real-World Impact: Accelerating Research and Innovation

The practical impact of the H100 on AI research is already being felt across various industries. One notable example is SES AI, which leveraged NVIDIA’s DGX Cloud—powered by H100 GPUs—to train their Molecular Universe LLM. This project, focused on advancing battery research, saw a dramatic reduction in development time, with years of work compressed into mere months. Such efficiency gains are transformative for researchers who are often constrained by both time and computational resources.

Moreover, the H100’s versatility extends to its compatibility with widely used AI frameworks such as TensorFlow and PyTorch, both of which run on NVIDIA’s CUDA platform and its libraries. This integration into existing ecosystems makes it easier for researchers to adopt the H100 without overhauling their workflows, thereby accelerating the pace of innovation. The ability to quickly adapt to new hardware while maintaining compatibility with established tools is a significant advantage in the fast-moving field of AI research.

Cost-Effectiveness and Accessibility

Beyond its technical prowess, the H100 is also making high-performance computing more accessible. Companies like Hyperbolic Labs have begun offering on-demand access to H100 GPUs at rates as low as $0.99 per hour, which is up to 70% cheaper than traditional cloud providers. This affordability, coupled with a 99.9% uptime guarantee, democratizes access to cutting-edge computational resources, allowing a broader range of researchers and developers to participate in AI innovation.

This cost-effectiveness is particularly important for smaller research teams, startups, and academic institutions that may not have the budgets of large tech companies but still require powerful hardware to compete in the AI space. By lowering the financial barriers to entry, the H100 is fostering a more inclusive and diverse AI research community.
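The pricing above lends itself to a quick back-of-the-envelope calculation. The sketch below uses the $0.99 per GPU-hour rate and the 70% figure quoted in this section; the job size (8 GPUs for 72 hours) is a hypothetical workload chosen only for illustration, and the function names are not from any provider's API.

```python
def job_cost(num_gpus: int, hours: float, rate_per_gpu_hour: float) -> float:
    """Total cost of renting `num_gpus` GPUs for `hours` at a flat hourly rate."""
    return num_gpus * hours * rate_per_gpu_hour


def implied_baseline_rate(discounted_rate: float, discount: float) -> float:
    """Rate that `discounted_rate` is `discount` (a fraction in [0, 1)) cheaper than."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return discounted_rate / (1 - discount)


if __name__ == "__main__":
    rate = 0.99  # USD per GPU-hour, as quoted in the article
    baseline = implied_baseline_rate(rate, 0.70)  # rate implied by "70% cheaper"
    gpus, hours = 8, 72  # hypothetical fine-tuning job
    print(f"On-demand cost: ${job_cost(gpus, hours, rate):,.2f}")
    print(f"Implied baseline rate: ${baseline:.2f} per GPU-hour")
    print(f"Cost at baseline rate: ${job_cost(gpus, hours, baseline):,.2f}")
```

For a small team, the difference between the two totals is often what decides whether an experiment gets run at all.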

Memory Capabilities: Handling Massive Datasets

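The H100’s memory capabilities are another critical factor in its impact on AI research. With 80GB of HBM3 memory and roughly 3.35TB/s of memory bandwidth in its SXM form (94GB on the H100 NVL variant), the H100 can keep large models and batches resident on the GPU while terabyte-scale datasets, which are increasingly common in AI applications, are streamed through it. The 141GB HBM3e figure often quoted alongside the H100 is the specification of its successor, the H200. This is especially relevant for tasks like training LLMs, which require processing vast amounts of text data, or analyzing complex scientific data in fields like genomics or climate modeling.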

For instance, the H100’s memory bandwidth and capacity allow researchers to work with models that have billions of parameters on a handful of GPUs, and with models approaching trillions of parameters when the weights are sharded across a large cluster. This capability not only accelerates current research but also opens the door to new types of AI applications that were previously infeasible due to hardware constraints.
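A rough way to see how parameter counts translate into hardware is to multiply the number of parameters by the bytes each one occupies. The sketch below does this for model weights only, assuming 80GB of memory per GPU (a default that can be changed); it ignores activations, optimizer state, and other overhead, so real deployments need more memory than this lower bound suggests.

```python
import math

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"FP8": 1, "FP16": 2, "FP32": 4, "FP64": 8}


def weight_bytes(num_params: int, precision: str) -> int:
    """Memory needed for the model weights alone, in bytes."""
    return num_params * BYTES_PER_PARAM[precision]


def min_gpus_for_weights(num_params: int, precision: str,
                         gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on the GPUs needed just to hold the weights.

    Assumes decimal gigabytes (1 GB = 1e9 bytes) and ignores activations,
    optimizer state, and framework overhead.
    """
    gpu_bytes = gpu_memory_gb * 1e9
    return math.ceil(weight_bytes(num_params, precision) / gpu_bytes)


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params in (7_000_000_000, 70_000_000_000, 1_000_000_000_000):
        for precision in ("FP8", "FP16"):
            gpus = min_gpus_for_weights(params, precision)
            print(f"{params:,} params at {precision}: at least {gpus} GPU(s)")
```

The same model needs half as many GPUs at FP8 as at FP16, which ties the memory discussion here back to the precision options described earlier.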

Conclusion: A New Era of AI Research and Development

The NVIDIA H100 GPU is more than just a powerful piece of hardware; it is a catalyst for innovation in AI research and development. Its advanced features, ranging from the Transformer Engine and precision flexibility to its scalability and memory capabilities, are enabling researchers to tackle more complex problems and reach results faster than earlier hardware allowed. Moreover, its falling rental cost and compatibility with existing tools are widening access to high-performance computing, so that the benefits of AI innovation are shared more broadly.

As AI continues to evolve, the H100 stands as a testament to the critical role that hardware plays in driving progress. By providing the computational foundation for the next generation of AI models and applications, the H100 is not only shaping the future of AI research but also accelerating the development of technologies that will transform industries and improve lives.